Can a fish drown? Where are my kidneys? Timeless questions that we have all asked Google. Okay, just me then. But a significant number of people have searched these terms – and as a result, Google Autocomplete will expand your search box to show you search suggestions like these.

This feature can bring up some strange and often confusing results. It can be hilarious, shocking and disturbing – but most of all, it can be educational, in good and bad ways.

How Do I Google Something?

Google Suggest was first introduced in 2004 to give users a list of potential search terms based on what they were typing. Fast forward almost ten years and the feature now works as letters are typed, internationally and locally, and is backed up by Google Instant. It is super helpful, especially on mobile devices, where typing on a touch screen is significantly less fun than typing on a keyboard. It makes the search process faster and corrects the typos most commonly made on touch screen keyboards – and, surprisingly, it’s only very rarely annoying.

Sometimes though, it is confusing, funny or downright offensive. “Autocomplete fails” and the blog fodder they have provided over the years are a testament to a few things: firstly, automating everything isn’t good. Secondly, people search for some weird stuff. Thirdly, censorship is not necessarily a bad thing.

Autocomplete – How Does it Work?

Google says that autocomplete is a reflection of collected search activity from around the web, mixed with the content of pages indexed by Google. It also adds in signed-in users’ search history for good measure, to help them find those old forgotten gems or retrace their searching steps. This can be turned off, though, and it’s not the thing I’m really going after here.

The kind of weird and wonderful results we often get subjected to are apparently what other people have typed into that box in the past – but this post from Rishi Lakhani explains that things (as always) aren’t that simple. Still, he shows a famous example of how this has been manipulated in the past using outsourced, bogus search volume alone. The suggestions are determined by an algorithm, which uses several factors and filters out violent, hateful or pornographic terms (with some very severe oversights, as I have discovered).

Popularity seems to be the key to the autocomplete feature, which makes sense – it adds a social element to searching and helps identify popular events and themes. It also conveniently passes the blame for any offensive or misguided content in the suggestion window on to us, the people.
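To make the popularity idea concrete, here is a rough sketch in Python. It is a toy illustration only, not Google's actual algorithm, which is not public and uses many more signals. The `suggest` function, the `BLOCKED_WORDS` list and the sample query log are all made up for this example. It ranks logged queries that share a prefix by how often they were searched, and drops any that contain a blocked word.

```python
from collections import Counter

# Hypothetical blocklist standing in for a policy filter (not Google's real list).
BLOCKED_WORDS = {"violent", "hateful"}


def suggest(query_log, prefix, limit=4):
    """Return the most frequently logged queries that start with `prefix`."""
    prefix = prefix.strip().lower()
    # Count how many times each (normalised) query appears in the log.
    counts = Counter(q.strip().lower() for q in query_log)
    candidates = [
        (query, count)
        for query, count in counts.items()
        if query.startswith(prefix)
        and not BLOCKED_WORDS.intersection(query.split())
    ]
    # Most popular first; ties broken alphabetically so the order is stable.
    candidates.sort(key=lambda item: (-item[1], item[0]))
    return [query for query, _ in candidates[:limit]]


# Made-up search log: each entry is one search by one person.
log = (
    ["can a fish drown"] * 5
    + ["can a fish see in the dark"] * 3
    + ["can a fish be hateful"] * 9  # popular, but caught by the filter
    + ["where are my kidneys"] * 4
)
print(suggest(log, "can a fish"))

# Simulate bogus search volume being pumped in for a single phrase.
boosted = log + ["can a fish climb trees"] * 50
print(suggest(boosted, "can a fish"))
```

In this toy version the second call puts "can a fish climb trees" at the top of the list purely because of the inflated count. That is the weakness of ranking on volume alone, and it is the kind of manipulation described above.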

But I wonder – how influential is it on users – would you select a suggested result over what was in your mind? Probably, if it was weird or shocking enough. But is that really what Google wants to do? Distract users from finding what they want, to lead them instead down another path altogether?

The Power of Suggestion

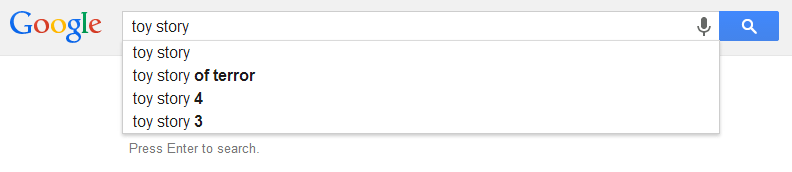

How often do you find yourself clicking suggested results, or cutting a search short with Google Instant? It can be a brilliant way to discover things you had never seen before. For example, I decided to search for Toy Story, the Pixar classic:

Among the suggestions is “Toy Story of Terror” – which at the time of writing has yet to air. I didn’t know anything about this before I made the search. It took me away from my intended search entirely – but I discovered something brand new. Just as YouTube has become legendary for taking you a million miles from your original search, Google’s Autocomplete is offering a path to discovery. Is this a good thing, or is it just distracting us from what we really want?

Once you’ve discovered something new, do you care about your original query any more?

Is Google Autocomplete Promoting Negativity?

There are numerous alleged methods for manipulating autocomplete for increased traffic and profit, and several theories about how social mentions can affect it – but I’m not going to go into that, mainly because it’s filled with risk. I want to focus more on how it can divert a user, misrepresent the public and affect our perceptions of each other. Negativity can draw the eye with far more force than a benign, standard issue keyword – and the evils of the autocomplete box can be disturbing. A potentially harmless search for something most people might want answers to can deliver shocking suggestions.

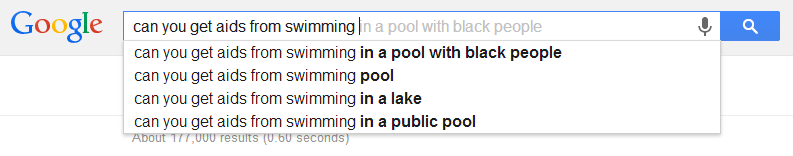

Which suggestion stands out as completely out of context here?

Regardless of how popular a term may be, should it really be there? While the first half of the example question above (although somewhat misguided) is legitimately something all people could at some stage wonder about and look for answers to, the second (suggested) half seems to violate Google’s own house rules.

Google has in the past sponsored ads against antisemitism in search results, which I witnessed myself while searching for (completely benign) user generated PPC terms. I’ve never been able to repeat those results since, suggesting a change of stance on the matter.

Make what you will of the screenshot above, but I feel that allowing it to pass after a campaign to remove offensive, misguided search results is a tad hypocritical. But above all else, it’s taking a very skewed snapshot of society by indirectly endorsing and unintentionally promoting the views of only some. The danger is that this narrow field of view gains momentum and portrays itself as what everyone is thinking, when it’s not what everyone is thinking.

It does not represent the question posed. It does not represent the people at large. Sexism has been highlighted recently in a set of ads from UN Women, using real suggested searches. This gets the point across brilliantly: this is public perception, this is how women are still viewed by some in this day and age, and we’re all to blame for the perpetuation of stereotypes and negative association.

Sure, it’s a great way to open the conversation in a digital society and things do need to change – but does this truly reflect what’s going on in the hearts and minds of the people using Google to gather information? Are we all really to blame, or is this a case of the minority being able to hijack public thought and choice, with the shock value attached to it being the only reason it gains momentum?

What I’m ultimately asking is – is Google Autocomplete worth it? Is it right to stir the pot so vigorously for the sake of transparency and freedom of speech? As always, I welcome your comments below on this issue.
