One of my most-used tools by far, URL Profiler makes website analysis and data curation much easier. You upload a list of URLs, connect accounts for website analysis tools such as Copyscape and Majestic, and it pulls through vast amounts of data. After countless hours using this tool and discovering ways of combining its functions to retrieve different data sets, I’m sharing 40 ways to use URL Profiler.

This post is divided into eight key sections, highlighting ways you can best leverage URL Profiler in your analytical and decision-making processes:

- Link Prospecting

- Content Review

- Social and Contact Data

- Backlink Analysis

- Competitor Research

- Technical Review

- Mobile Review

- Domain Analysis

Link Prospecting

1. API Keys

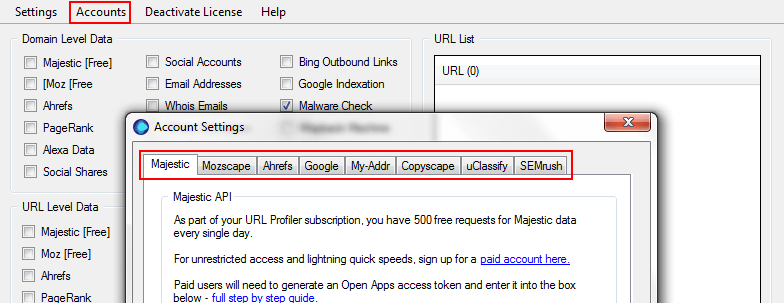

By plugging in your API keys for link analysis tools, including Moz, Majestic and Ahrefs, you can pull through more link data all in one place. Under the Accounts section, you can set up API connections with external tools, which only needs to be done once. You can then tick the relevant boxes when you run your links through the tool and export all the link data in one spreadsheet.

2. URL and Domain-Level Metrics

Sometimes it’s useful to learn metrics at a URL level (such as to understand the value of a link on a specific page), whereas sometimes you may be more interested in domain-level metrics (such as to decide if it would be beneficial to outreach to a particular site). With URL Profiler you can choose to export URL-level and/or domain-level data in the same crawl, using the options in the different sections.

3. Topical Link Prospecting

Along with collating authority data about a link, it’s also useful to understand a domain’s relevance to your website. Running a list of prospect links through URL Profiler with the uClassify and Majestic options selected will provide topical link data, highlighting any websites deemed relevant to yours based on their content.

The export you receive highlights what topics best match the content on each domain, helping you to quickly refine a list of potential websites you wish to outreach to.

4. Review Referral Sources

Assuming we have a list of websites we wish to outreach to with the aim of being featured for relevant referral traffic, we want to identify the value of outreaching to each one. Using both the SEMrush and Alexa Rank options at domain level, we can gain a rough understanding of a website’s standing by collecting organic and paid traffic data, whilst also gauging the quality of the domain (which is often a reflection of the quality of its traffic).

This information will help us to make informed decisions about the domains we wish to outreach to, saving time and resources.

5. Outreach Contact Information

Do you have a list of domains at hand that you wish to outreach to for link-building or content-creation purposes? If you’re contacting a business, look for a team page and input those URLs with the ‘Email Addresses’ and ‘Social Accounts’ options selected at URL level. Where they are displayed on the website, you’ll be able to obtain individual email addresses and personal social profiles rather than just business profiles, allowing for more effective outreach.

Even if you do not use all the contact details scraped, you can use this information to begin building a personal black book of relevant industry contacts for future activity.

Content Review

6. External Duplicate Content

By using the Copyscape API, you can run a number of URLs through URL Profiler to carry out duplicate content checks in bulk. You can do this directly through Copyscape’s Batch Search, but doing it in URL Profiler lets you run other checks at the same time (e.g. backlink checks, domain checks).

7. Internal Duplicate Content

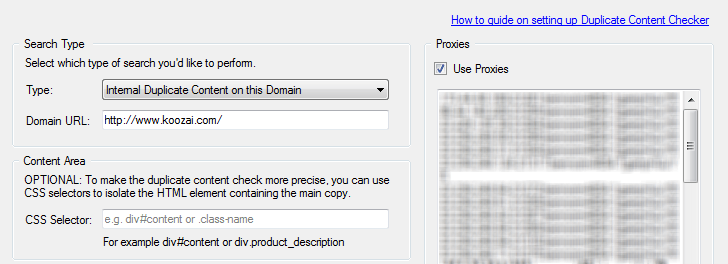

Along with the Copyscape API for external duplication issues, you can also check for internal duplication. To do this, first input the list of internal URLs to be checked in the URL List, then select the ‘Duplicate Content’ option, which opens a new window.

In this window, set the search type to internal checks and then specify the domain URL.

Although URL Profiler is smart at identifying the ‘real’ content area to check on a URL (such as the product description or the main body of text), its developers are the first to admit that it’s not perfect, so it’s best to use CSS selectors to tell the tool which HTML elements to check.

For more information on CSS Selectors and using them in URL Profiler, see the following blog post.

8. Thin Content

The data pulled from duplicate content checks also includes the word count for each page, enabling quick identification of pages with little content. Finding thin pages manually can be a long task, and given the importance of on-page relevancy, this speeds things up considerably.
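If you prefer to filter the export outside a spreadsheet, the short Python sketch below lists pages that fall under a word-count threshold. It assumes the export has been loaded as rows with `url` and `word_count` columns; those column names and the 300-word default are illustrative assumptions, not URL Profiler’s actual export format or a recommended cut-off.

```python
from typing import Dict, List


def find_thin_pages(rows: List[Dict[str, str]], min_words: int = 300) -> List[str]:
    """Return URLs whose word count is below min_words, thinnest first."""
    # Hypothetical column names: "url" and "word_count".
    thin = [
        (int(row["word_count"]), row["url"])
        for row in rows
        if int(row["word_count"]) < min_words
    ]
    thin.sort()  # ascending by word count, then by URL
    return [url for _, url in thin]


rows = [
    {"url": "https://example.com/a", "word_count": "120"},
    {"url": "https://example.com/b", "word_count": "850"},
    {"url": "https://example.com/c", "word_count": "45"},
]
print(find_thin_pages(rows))  # ['https://example.com/c', 'https://example.com/a']
```

Sorting thinnest first means the pages most in need of attention sit at the top of the list.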

9. Identifying Popular Pages

Finding what subject and type of content works with your audience is a process of trial and error, but with effective analysis of your current content, you can identify trends in your content.

URL Profiler lets you review a large list of blog posts at once, making content analysis quick and surfacing key findings. Using a combination of options such as social shares (URL-level data), Google Analytics and readability, you can gauge each page’s popularity on social networks (excluding Twitter), see how users behave on each post and identify blog themes.

10. Real Traffic Data through Google Analytics

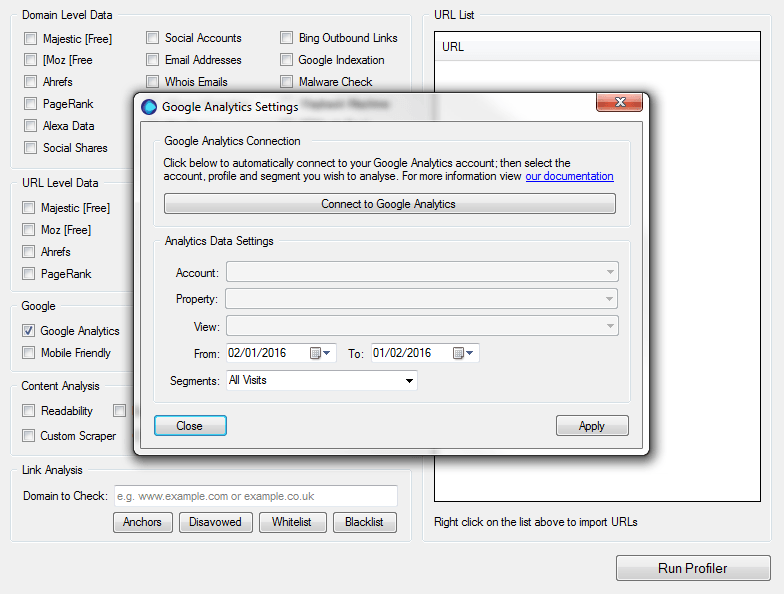

URL Profiler can be used to pull in Google Analytics data for any number of URLs. This is done by connecting directly into a Google Analytics profile by selecting the ‘Google Analytics’ option.

This function pulls through a range of useful traffic statistics for specified URLs, including bounce rate, average time on page and sessions, which can help to identify unnatural user behaviour or to gather general insight across page performances.

11. Keyword Extraction

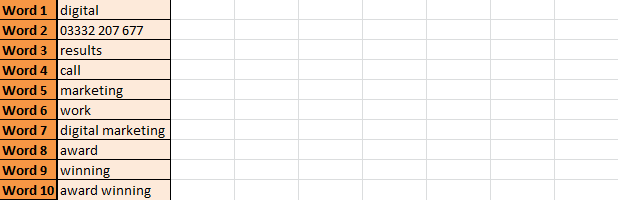

The word list generated by using the ‘Readability’ option helps to identify what certain pages are about and to speed up content audits. It also helps to understand the on-page relevancy for certain keywords you’re trying to target and can highlight areas for increased keyword relevancy.

So when using https://www.koozai.com/ as the URL to test, we are given the following keyword extraction:

This is done by viewing the HTML of a page in its entirety, but you can further refine the content being reviewed by using CSS content selectors.

12. Keyword Search Volume

Using Google’s Search Analytics API, URL Profiler can collate keyword data for each URL inputted, including queries that show as ‘(not provided)’ in Google Analytics. Select the Search Analytics option, sign in to Search Console and choose the property relevant to the URLs inputted, and you can export average CTR, total impressions and clicks, along with the total number of ranking keywords for each URL.

More data is also given including the top 10 keyword queries and click metrics for each URL, giving you real insights into keyword performance.

13. Keyword Opportunities

For extra depth in your audit of current keyword performance, you can also identify keyword opportunities for website growth. Using the same report as above, navigate to the ‘Keyword Opportunities’ tab; here, you can see keywords you already rank for, along with position, search volume and other metrics.

This report highlights keywords a URL already ranks for that could deliver noticeable wins with some refinement, as well as keywords a page may want to start actively targeting.

14. Content Readability

The ‘Readability’ option also provides content readability metrics, which help you understand the content and language used in greater detail. It’s easy to become blind to the tone of content you’ve created, and this option highlights interesting metrics such as sentiment score and Dale-Chall score to deliver real insight into how your content reads. This can also serve as an indicator of how search engines may be reading your content.

Another option you can use for further content insight within URL Profiler is uClassify, which provides similar data to the ‘Readability’ option along with other factors such as tonality.

15. Duplicate Pages

As well as showing how your website’s content reads, the ‘Readability’ option also provides data that helps to identify entirely duplicated pages. The hash column in a readability report lists a short string (a hash) generated from the content on each URL, so pages with identical content share the same hash.

Once you’ve run multiple URLs through a readability report, open the export and apply a conditional format to highlight duplicate values in the hash column. Any URL highlighted as a duplicate is very likely a duplicated page.
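The same duplicate-hash check can be scripted. This minimal sketch groups URLs by their hash value and keeps only hashes shared by more than one URL; it assumes rows with `url` and `hash` keys, which are hypothetical names rather than the export’s confirmed headers.

```python
from collections import defaultdict
from typing import Dict, List


def group_by_hash(rows: List[Dict[str, str]]) -> Dict[str, List[str]]:
    """Map each content hash shared by two or more URLs to those URLs."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        # Hypothetical column names: "url" and "hash".
        groups[row["hash"]].append(row["url"])
    return {h: urls for h, urls in groups.items() if len(urls) > 1}


rows = [
    {"url": "https://example.com/red-shoes", "hash": "a1b2"},
    {"url": "https://example.com/red-shoes?ref=nav", "hash": "a1b2"},
    {"url": "https://example.com/blue-shoes", "hash": "c3d4"},
]
print(group_by_hash(rows))
# {'a1b2': ['https://example.com/red-shoes', 'https://example.com/red-shoes?ref=nav']}
```

Unlike a conditional format, this keeps each set of duplicates together, which makes it easier to decide which URL should be the canonical version.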

Social and Contact Data

16. Email Address Extraction from URLs

You can extract email addresses from key contact pages using the ‘Email Addresses’ option under URL settings. This allows you to build a list of contact details for content marketing outreach and relationship building. Simply paste the contact pages of all the sites you would want to contact, and if they contain an email address this value will be extracted into your export.
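URL Profiler does not document exactly how it finds addresses, but the general idea can be sketched with a regular expression over a page’s HTML. The pattern below is a simplified assumption, not the tool’s method: it ignores obfuscated addresses and is not a full email validator.

```python
import re
from typing import List

# Deliberately simple pattern: it misses obfuscated addresses such as
# "name [at] example.com" and is not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(html: str) -> List[str]:
    """Return unique, lower-cased email addresses in order of first appearance."""
    found: List[str] = []
    for match in EMAIL_RE.findall(html):
        address = match.lower()
        if address not in found:
            found.append(address)
    return found


page = '<a href="mailto:Jane@Example.com">Jane</a> <p>Press: press@example.co.uk</p>'
print(extract_emails(page))  # ['jane@example.com', 'press@example.co.uk']
```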

17. Social Accounts

Collating a record of social accounts was once a lengthy task, but URL Profiler speeds it up massively. Using the ‘Social Accounts’ option and adding a list of websites you want social account details for, you can quickly scrape all social profiles linked to from a website at domain and/or URL level.

18. Social Profiles Scraping

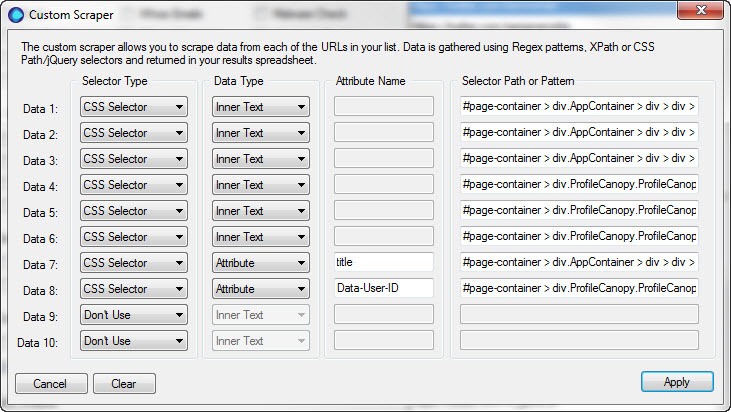

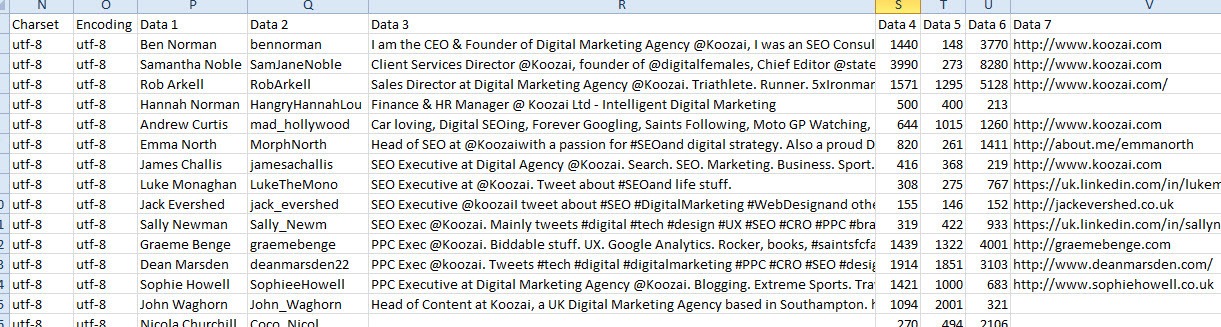

Whether it’s to keep record of a competitor’s social growth or activity or to simply obtain details of potential outreach contacts, URL Profiler enables you to scrape social profiles using the ‘Custom Scraper’ option.

Let’s assume you’ve undertaken the above task and now have a list of profiles from Twitter (the example used in their blog post). Paste these into the URL List module. The next step is to select ‘Custom Scraper’ and define what you want to scrape; this is done by outlining the data points you want to look for:

Defining each of the four elements will help the tool understand what data you want to scrape from each URL inputted. For more information on how to determine the information you provide, click here.

This activity can be undertaken across other social networks as long as the page markup is consistent across the different profile URLs, as is the case with Twitter. Once the tool has finished running, your export will look something like this:

Backlink Analysis

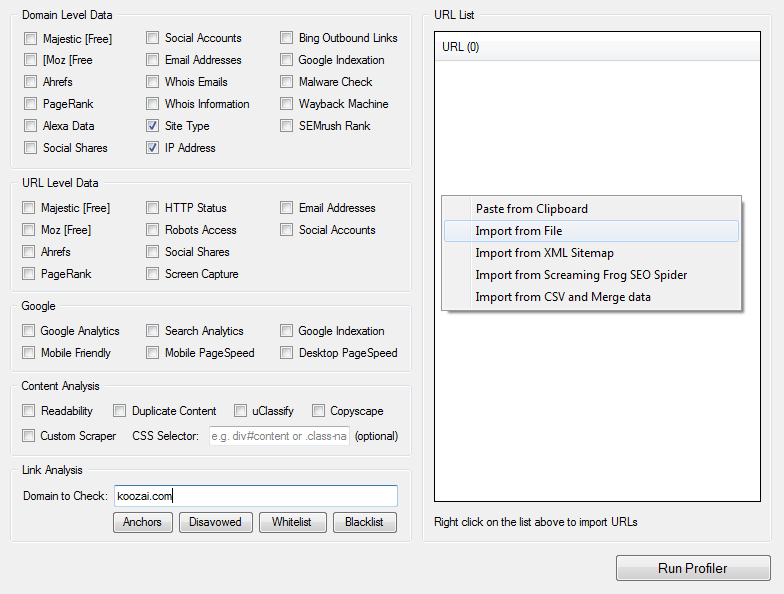

URL Profiler enables you to effectively review a large number of inbound links in a short space of time simply by looking into anchor text, site types and IP information.

First, export raw data files from any of the supported reporting tools (including Majestic, Open Site Explorer and Google Search Console), then import them into URL Profiler using ‘Import from File’; the tool will merge the files and remove any duplicates (magic!).

The following five points are all part of the link analysis process, as outlined on URL Profiler’s blog.

19. Anchor Text Review

Abuse of keyword-rich anchor text to manipulate rankings has led Google to crack down on the practice. As such, it’s important to ensure that your link profile does not contain an unnatural balance of keyword-optimised anchor text versus branded, natural text.

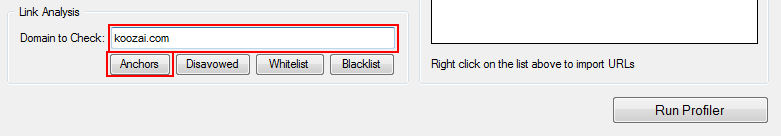

URL Profiler allows you to quickly review a large number of links, classifying the anchor text per URL. To review anchor text across all the inputted URLs, first enter the domain you’re checking against, then define the anchor text you’re looking for by clicking the ‘Anchors’ button.

When using the ‘Anchors’ function, a window pops up with the following three tabs:

- Branded – web address and brand spelling variations

- Commercial – text that may look spammy, e.g. SEO, free links

- Generic – standard descriptive anchor text, e.g. click here, now, website

You want to ensure you’re as comprehensive as possible when adding anchor text variants to these tabs to allow for easy analysis once the tool has finished running.
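To see why comprehensive variant lists matter, here is a rough sketch of how anchors might be sorted into those three tabs. The term lists, the whole-word matching and the Branded-then-Commercial-then-Generic priority are all assumptions for illustration, not URL Profiler’s actual classification logic.

```python
import re
from typing import Dict, List

# Hypothetical term lists mirroring the Branded, Commercial and Generic tabs.
# Replace them with your own brand spellings and keyword variants.
ANCHOR_TERMS: Dict[str, List[str]] = {
    "Branded": ["example", "example.com", "www.example.com"],
    "Commercial": ["seo", "free links"],
    "Generic": ["click here", "here", "now", "website"],
}


def classify_anchor(anchor: str, terms: Dict[str, List[str]] = ANCHOR_TERMS) -> str:
    """Return the first category whose variant appears as a whole word in the anchor."""
    text = anchor.strip().lower()
    for category, variants in terms.items():  # dicts keep insertion order
        for variant in variants:
            # Word boundaries stop "seo" matching inside "Seoul".
            if re.search(rf"\b{re.escape(variant)}\b", text):
                return category
    return "Other"


for anchor in ["Example.com", "cheap SEO services", "click here", "Seoul travel guide"]:
    print(anchor, "->", classify_anchor(anchor))
```

Anything that lands in "Other" is a sign that a variant is missing from one of the lists.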

20. Unnatural Pattern Identification

Although not a clear warning signal, a domain sharing an IP address and C Block with a large number of other domains is always worth reviewing as there’s a possibility for a link network.

For a quick review of large numbers of domains, combine the ‘Site Type’ and ‘IP Address’ options with the anchor text review described above, and look out for certain signals:

- The number of domains on one IP address

- The site type for a certain domain paired with those on the same IP address

- The site type exclusively (link directory, etc.)

- The type of anchor text as defined in the Anchor Text Variations window.
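The first two signals come down to counting domains per IP address and per C block. This sketch treats a "C block" as the /24 network an IPv4 address sits in and flags blocks hosting several referring domains; the three-domain threshold is an arbitrary assumption, and the input is a hypothetical domain-to-IP mapping taken from the export.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, List


def c_block(ip: str) -> str:
    """Return the /24 network (the 'C block') that an IPv4 address sits in."""
    return str(ipaddress.ip_network(f"{ip}/24", strict=False))


def crowded_blocks(domain_ips: Dict[str, str], min_domains: int = 3) -> Dict[str, List[str]]:
    """Group referring domains by C block and keep blocks hosting min_domains or more."""
    blocks: Dict[str, List[str]] = defaultdict(list)
    for domain, ip in domain_ips.items():
        blocks[c_block(ip)].append(domain)
    return {
        block: sorted(domains)
        for block, domains in blocks.items()
        if len(domains) >= min_domains
    }


domain_ips = {
    "a.example": "192.0.2.10",
    "b.example": "192.0.2.11",
    "c.example": "192.0.2.200",
    "d.example": "198.51.100.7",
}
print(crowded_blocks(domain_ips))
# {'192.0.2.0/24': ['a.example', 'b.example', 'c.example']}
```

A crowded block is only a prompt for a closer look at site types and anchors, not proof of a link network.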

21. Link Scoring

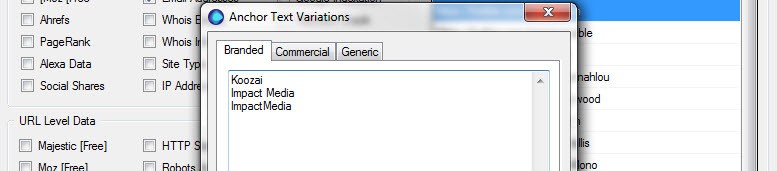

When you run the tool following the process above, the export contains multiple sheets. The first is an overview detailing different data sets such as anchor types, link types and the location of each link on the website.

In Sheet Two, you’ll find a detailed analysis of each URL inputted into the tool, with data including link score, link score reasoning, anchor text information and much more. With this information, you can undertake well-informed analysis of external backlinks and validate the data provided.

22. Malware Check

A further check you can undertake to identify unnatural, low-quality referring domains is identifying whether a domain appears on Google’s blacklist. Using the ‘Malware Check’ option against your list of URLs will highlight any domains suspected by Google of being a malware or phishing website.

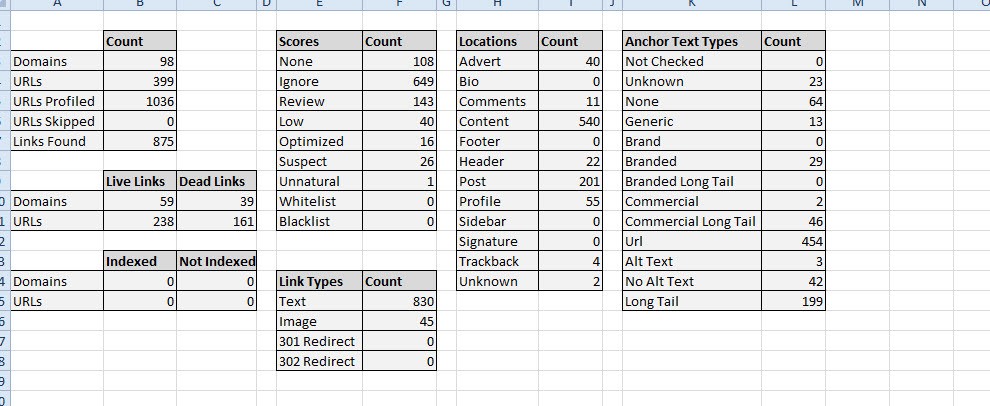

23. Whitelists and Blacklists

Following link analysis, you will want to make use of URL Profiler’s domain whitelists and blacklists. These let you input specific domains: the whitelist for domains you know are fine and the blacklist for domains you know are toxic. Remembering to update these lists each time you undertake link analysis will save you time on future work.
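If you keep your own lists outside the tool, the same triage can be scripted. In this sketch, whitelisted links are dropped, blacklisted ones are flagged and everything else is left for review; subdomains are assumed to inherit their parent domain’s status, which is a simplifying assumption rather than how URL Profiler necessarily matches domains.

```python
from typing import Iterable, List, Set, Tuple
from urllib.parse import urlparse


def host_of(url: str) -> str:
    """Lower-cased host name without a leading 'www.' (expects a full URL with scheme)."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def in_list(host: str, domains: Set[str]) -> bool:
    """True if host is one of the listed domains or a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def triage(
    urls: Iterable[str], whitelist: Set[str], blacklist: Set[str]
) -> Tuple[List[str], List[str]]:
    """Drop whitelisted links; split the rest into (blacklisted, still_to_review)."""
    toxic: List[str] = []
    review: List[str] = []
    for url in urls:
        host = host_of(url)
        if in_list(host, whitelist):
            continue
        (toxic if in_list(host, blacklist) else review).append(url)
    return toxic, review


links = ["https://www.trusted.example/a", "http://spam.bad.example/x", "https://unknown.example/y"]
print(triage(links, whitelist={"trusted.example"}, blacklist={"bad.example"}))
# (['http://spam.bad.example/x'], ['https://unknown.example/y'])
```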

24. Gathering Contact Information

Once you’ve analysed the links and identified a list of domains you wish to outreach to for link removal, you can quickly gather all the contact information needed (if available). Simply add the list of links to the URL List module, select the ‘Email Addresses’ and ‘WHOIS Emails’ options and you’re good to go.

Competitor Research

25. Site Traffic and Popularity Checks

Using multiple options, including Alexa Data and SEMrush Rank, you can gauge a competitor’s website popularity by looking at organic and paid traffic, the paid keywords they’re targeting and multiple Alexa ranking metrics.

Although the data provided may need to be taken with a pinch of salt, it still acts as a data source for further research and is a starting point for determining a competitor’s share of online traffic in your market in comparison to your website.

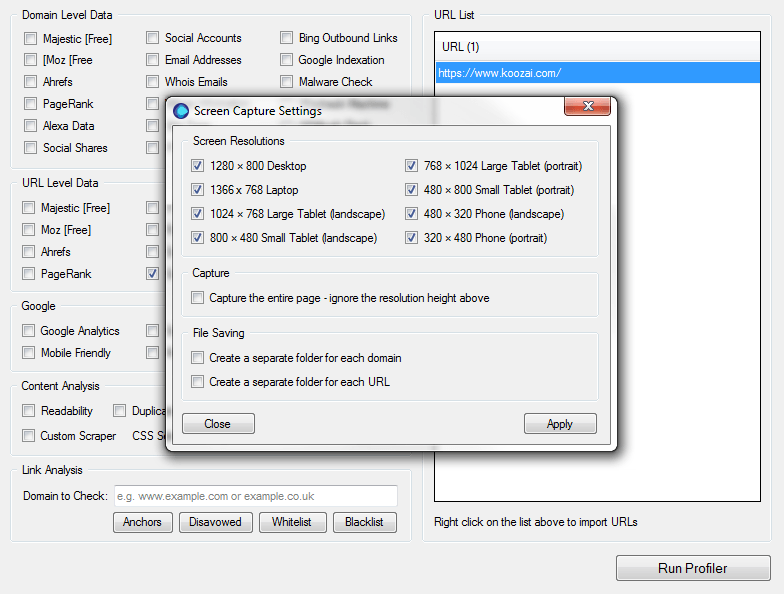

26. Competitive Screen Capture

Seen a change in rankings that’s led to a competitor climbing a number of places for a primary keyword? Losing custom through your website? There can be many reasons for this, one of which is a competitor making on-page changes.

URL Profiler allows bulk screen capture, helping you to keep a visual record of a competitor’s website on desktop, tablet and mobile that you can refer back to for analysis should you need to at a later date.

Technical Review

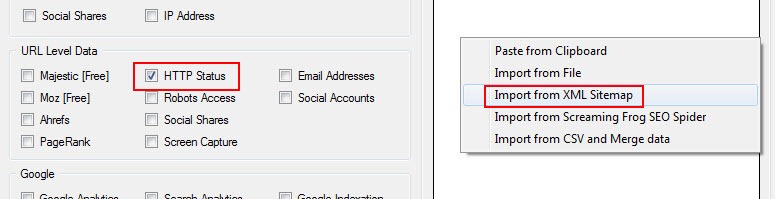

27. XML Sitemap Validation

You can import the URLs from an XML sitemap and select the ‘HTTP Status’ option to check that each URL in your sitemap returns a 200 status code. This lets you identify any broken links, redirected URLs or other crawl errors included within your sitemap that you may need to investigate further. If you’re using a dynamic sitemap, such errors may point to a bigger issue, as a dynamic sitemap should update automatically when you change URLs.
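URL Profiler handles the import itself, but if you want to inspect or pre-filter the URL list first, a standard sitemap can be parsed with Python’s built-in ElementTree. This sketch assumes a regular `<urlset>` sitemap in the sitemaps.org namespace; sitemap index files (`<sitemapindex>`) would need an extra step.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> List[str]:
    """Return every <loc> URL listed in a standard <urlset> XML sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/services/</loc></url>
</urlset>"""
print(sitemap_urls(sample))  # ['https://example.com/', 'https://example.com/services/']
```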

28. Broken and Redirect Link Clean-up

Similarly, by running your site’s URLs through URL Profiler with the ‘HTTP Status’ option selected, you can identify URLs that are broken (404) or pass through a redirect (301/302). Updating the internal links on your site to point directly to live destination pages (those returning a 200 status code) speeds up the site for both users and search engine crawlers and helps to conserve valuable crawl budget.
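Once you have the status codes, sorting them into a fix list is simple. This sketch assumes a hypothetical mapping of URL to status code taken from the export and separates redirects (301, 302, 307, 308) from broken pages (404, 410); other codes, such as 5xx errors, are left out here and deserve their own investigation.

```python
from typing import Dict, List


def links_to_fix(statuses: Dict[str, int]) -> Dict[str, List[str]]:
    """Split crawled URLs into redirects and broken pages that internal links should avoid."""
    result: Dict[str, List[str]] = {"redirect": [], "broken": []}
    for url, code in statuses.items():
        if code in (301, 302, 307, 308):
            result["redirect"].append(url)
        elif code in (404, 410):
            result["broken"].append(url)
    return result


statuses = {
    "https://example.com/": 200,
    "https://example.com/old": 301,
    "https://example.com/gone": 404,
}
print(links_to_fix(statuses))
# {'redirect': ['https://example.com/old'], 'broken': ['https://example.com/gone']}
```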

29. Identifying Indexation Issues

By checking the ‘Google Indexation’ option, you can run URLs and XML Sitemaps through the tool and root out indexation issues. This often highlights some discrepancies which can trigger further investigation. Are there key pages that you would want or expect to be indexed which aren’t? What could be causing this?

30. Google PageSpeed Performance Checks

You can use URL Profiler to check both desktop and mobile PageSpeed scores of pages in bulk. This allows you to see at a glance which pages have notably poorer scores and may require specific attention. Do the pages with speed issues make unnecessary use of video, scripts or oversized images? A number of factors could be causing individual pages to load more slowly than others, but addressing these issues could have a big impact on the search exposure of those pages.

31. Internal Linking Review

Internal linking is a major factor in SEO; it helps a website to be effectively crawled whilst allowing for link juice to be passed through pages. A well-established site structure with strong internal linking can really improve the visibility of key pages on a website.

When running a list of URLs using the URL Level Majestic option, you are able to view the number of internal links pointing to individual URLs. By sorting internal links per URL from smallest to largest in the export, you are able to clearly identify pages that need greater internal linking.

32. Robots Access

Although this is possible through Google’s Search Console, as with many of the points in this post, URL Profiler makes it a much quicker process. Use the ‘Robots Access’ option to check whether certain URLs are blocked from being crawled. This identifies crawlability errors caused by pages incorrectly blocked via robots.txt, meta robots tags or X-Robots-Tag headers. You can also identify any rel="canonical" tags in place, giving you a true picture of a URL’s accessibility.

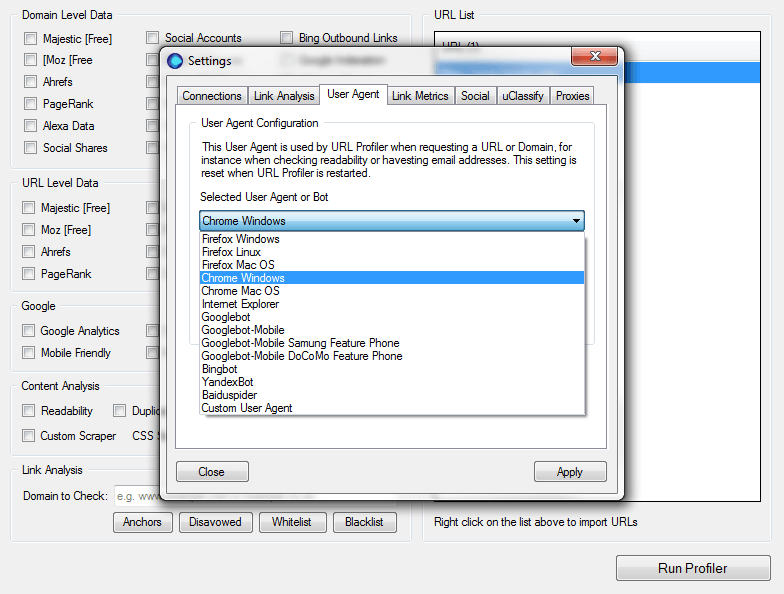

33. Crawlability per User-Agent

Test the crawlability of your website per user agent to see whether each one can access certain URLs. Deliberately blocking a certain user agent from a page and want to check this is correctly in place? Want to make sure your website is crawlable by all? Either way, navigate to ‘Settings’ and then the ‘User Agent’ tab, where you can select from a list of popular user agents or specify a custom one by entering its user-agent string.
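For a quick local sanity check of the robots.txt side of this, Python’s standard `urllib.robotparser` can evaluate rules per user agent. The sketch below parses robots.txt text supplied as a string rather than fetching it, and the rules and user agents shown are made-up examples. It covers robots.txt only; meta robots and X-Robots-Tag directives need the page itself.

```python
from typing import Dict, List
from urllib import robotparser


def access_by_agent(robots_txt: str, url: str, agents: List[str]) -> Dict[str, bool]:
    """Report whether each user agent may fetch url under the given robots.txt rules."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


ROBOTS = """User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /admin/
"""
print(access_by_agent(ROBOTS, "https://example.com/private/page", ["Googlebot", "Bingbot"]))
# {'Googlebot': False, 'Bingbot': True}
```

Bingbot is allowed here because it falls back to the `*` group, which only blocks `/admin/`.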

34. Broken Link Prioritisation

When faced with a large number of broken links, it can often be difficult to decide where to start and with what links. Although you would want to ensure all broken links point to the most relevant live source, in a situation where you’re faced with thousands of these issues, you want to identify the pages with the most SEO value.

Input the list of broken links on your website (from Google’s Search Console data and any data from a crawler), then use the URL-level Moz option. Once the tool has finished, the export will show Page Authority for each URL; this is the metric to work with, starting with the highest-scoring URLs and working your way down.
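Ordering the export by Page Authority is a one-line sort. This sketch assumes rows with `url` and `page_authority` keys, which are hypothetical column names; adjust them to match your export.

```python
from typing import Dict, List


def prioritise_broken(rows: List[Dict[str, str]]) -> List[str]:
    """Return broken URLs ordered by Page Authority, highest first."""
    # Hypothetical column names: "url" and "page_authority".
    ranked = sorted(rows, key=lambda row: float(row["page_authority"]), reverse=True)
    return [row["url"] for row in ranked]


rows = [
    {"url": "https://example.com/old-guide", "page_authority": "12"},
    {"url": "https://example.com/old-service", "page_authority": "34"},
    {"url": "https://example.com/old-news", "page_authority": "27"},
]
print(prioritise_broken(rows))
# ['https://example.com/old-service', 'https://example.com/old-news', 'https://example.com/old-guide']
```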

35. Page Value Review

Understanding the value of a page to your website is crucial in truly being able to continually refine a website, building upon what’s already in place.

Select the Moz and Majestic options at URL level and input the URLs you wish to review (top-level pages, services pages, blog posts, etc.); the export will show the strong and weak pages across your website. Reviewing both Page Authority and the number of external links pointing to each page will highlight noticeable differences in value.

Mobile Review

36. Mobile-Friendly Tag Eligibility Checks

Rather than checking pages individually using Google’s Mobile-Friendly Test, you can use URL Profiler to test many pages at once. If you check only your homepage, you may miss issues with deeper pages that are not seen as mobile-friendly. Once you know which pages have issues and what those issues are, you can review these pages individually, fix the issues and work towards making your site fully mobile-friendly.

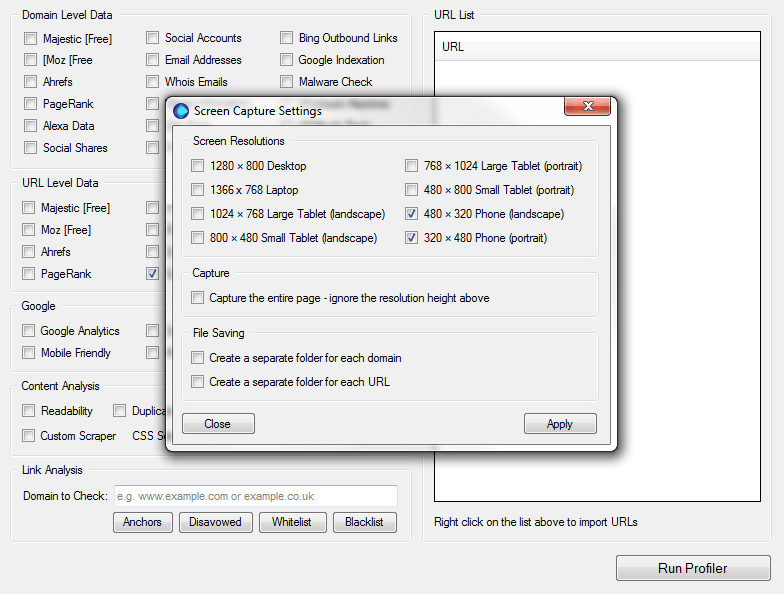

37. Mobile Website Design Test

Although websites can be considered mobile-friendly by Google, sometimes certain on-page elements respond to screen sizes incorrectly and can make it difficult for users to interact with the website. These issues may be buttons falling underneath other buttons, images not correctly reducing and rearranging or contact forms not being entirely visible.

The screen capture option enables you to simulate how a website is viewed on a mobile device (both portrait and landscape), helping to identify any on-page element issues.

Domain Analysis

38. Website Reputation

Gaining an understanding of how a domain is viewed in the eyes of Google can often indicate the state of the website’s reputation. The last thing you would want to do is to buy into a domain that is blacklisted.

Using a combination of the ‘Google Indexation’ and ‘PageRank’ options, you can gain a relatively strong idea of how a domain is seen by Google. If a domain isn’t indexed and doesn’t have a good PageRank either, it’s a clear signal that something is wrong and you may want to dig a little deeper.

Although it’s worth bearing in mind that PageRank is now an outdated metric, it can still be used as a guide for understanding a domain’s relationship with Google. You can further this analysis by combining other options such as Moz for further insight into domain reputation through Domain and Page Authority metrics.

39. Domain Prospecting

Buying a domain can be a technical process that requires research. Using the range of options available, you can determine the history of a list of prospect domains, enabling you to make informed decisions when purchasing.

Some important options to use when checking the status of a domain are:

- WHOIS Emails: Provides you with details of all emails associated with the domain.

- WHOIS Information: Provides a detailed WHOIS record of the domain, including expiry date, registrar and contact details.

- Wayback Machine: Highlights the number of entries in archive.org’s database, along with the dates of the first and last entries.

40. Bing Outbound Links

Using Bing’s linkfromdomain: search operator, which is unique to Bing, URL Profiler can identify the number of pages on a specified domain indexed by Bing and the number of outbound links. This is useful for understanding the quality of outbound linking undertaken and for calculating an average ratio of outbound links per page.

This can also be used to identify strong links your competitors are referring to on their website that may be relevant as a resource for your users.
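The outbound-links-per-page ratio is simply outbound links divided by indexed pages. This sketch compares several domains using a hypothetical mapping of domain to (outbound links, indexed pages) copied from the export, and skips domains with no indexed pages to avoid dividing by zero.

```python
from typing import Dict, List, Tuple


def outbound_ratios(stats: Dict[str, Tuple[int, int]]) -> List[Tuple[str, float]]:
    """Rank domains by outbound links per indexed page, highest first.

    stats maps domain -> (outbound_links, indexed_pages); domains with no
    indexed pages are skipped to avoid dividing by zero.
    """
    ratios = [
        (domain, links / pages)
        for domain, (links, pages) in stats.items()
        if pages > 0
    ]
    return sorted(ratios, key=lambda item: item[1], reverse=True)


stats = {"a.example": (1200, 400), "b.example": (50, 100), "c.example": (10, 0)}
print(outbound_ratios(stats))  # [('a.example', 3.0), ('b.example', 0.5)]
```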

Conclusion

The number of tasks this tool is capable of undertaking is incredible and the above list, although extensive, is only part of what it can do. Play around with this tool and find what works best for you.

Feel free to contact me if you have questions about anything I’ve discussed or if you want to share the different ways you’re using URL Profiler; I’d love to hear them!
